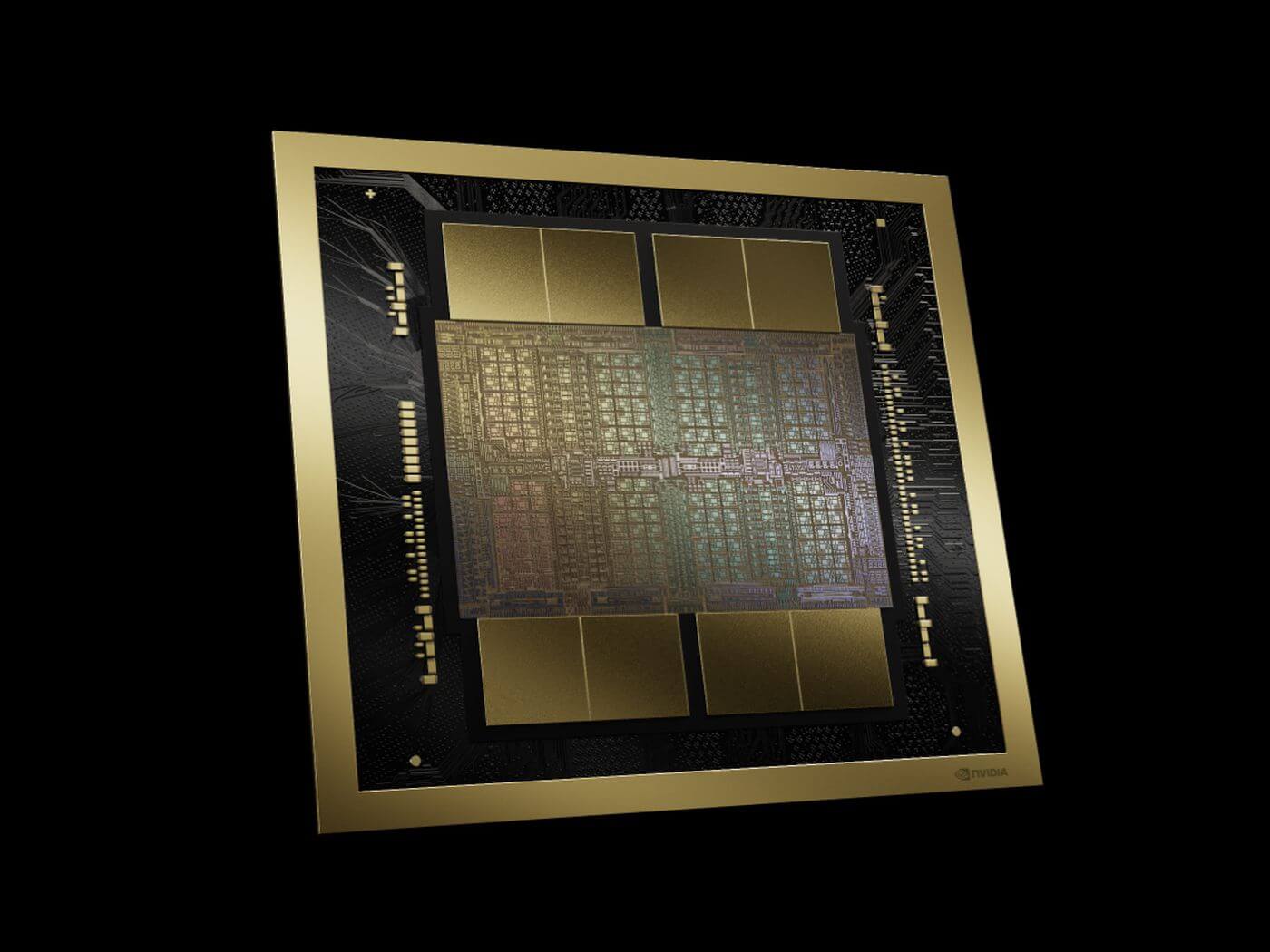

Nvidia’s CEO, Jensen Huang, has announced new AI chips and software for running next-generation AI models. The new AI graphics processors are named Blackwell and are expected to start shipping later this year.

The announcement came at Nvidia’s developer conference in San Jose, as the chipmaker seeks to solidify its position as the leading hardware provider for AI applications. The first Blackwell chip is named the GB200, and it allows for much larger GPUs than Nvidia’s ‘Hopper’ H100 chips. The GB200 features two B200 graphics processors and one Arm-based central processor. This gives AI companies a massive performance upgrade: 20 petaflops of AI performance versus 4 petaflops for the H100, a fivefold increase. It is a significant step for Nvidia as it continues to justify its colossal valuation.

Available As An Entire Server

Introducing NIM
